How I creatively tackled the long AI response latency

2023 · loading state design

Chegg is a leading online higher education platform with millions of subscribers. In 2023, it transformed its Q&A web app into an AI-powered chat experience. Before the streaming mechanism of AI responses was in place, users faced up to a 45-second wait, which had huge risk of user drop-off. I addressed this challenge successfully with creativity and strong logical thinking.

- Shipped

- Generative AI

- Motion design

My role

Sole UX designer

Skills

Motion design, logical thinking, cognitive science, diagramming

Duration

3 weeks

Core Team

UX designer (me), UX content designer, PM, Product marketing managers, Software engineers

Tools

Figma, FigJam

Context

We need a loading state to account for AI response latency

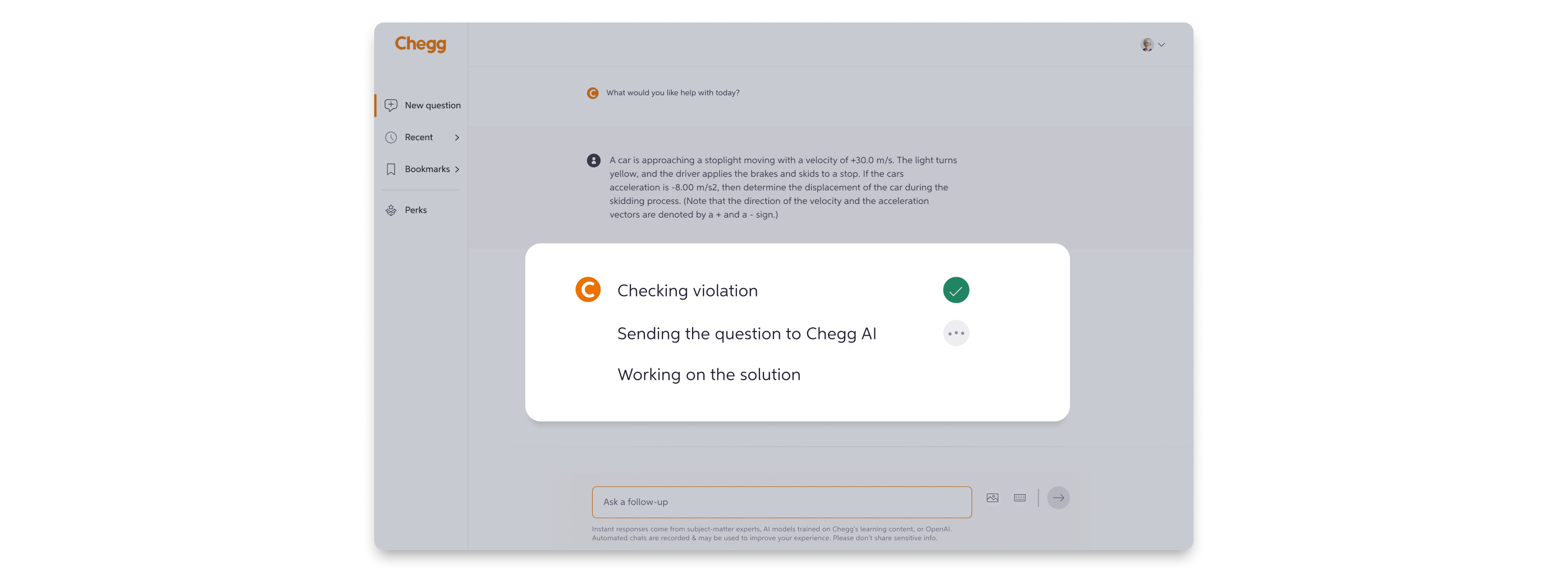

In Chegg’s new AI conversational learning experience, users would either instantly receive an expert solution from the archive or wait for an AI-generated solution from the Automation Engine, depending on which source provided higher quality for the specific topic. While answer streaming was a common way to mitigate long LLM response times, it required time to implement. In the interim, we needed a design solution to manage the delays and prevent user drop-off.

Challenges

The loading time can be extremely long and filled with uncertainty

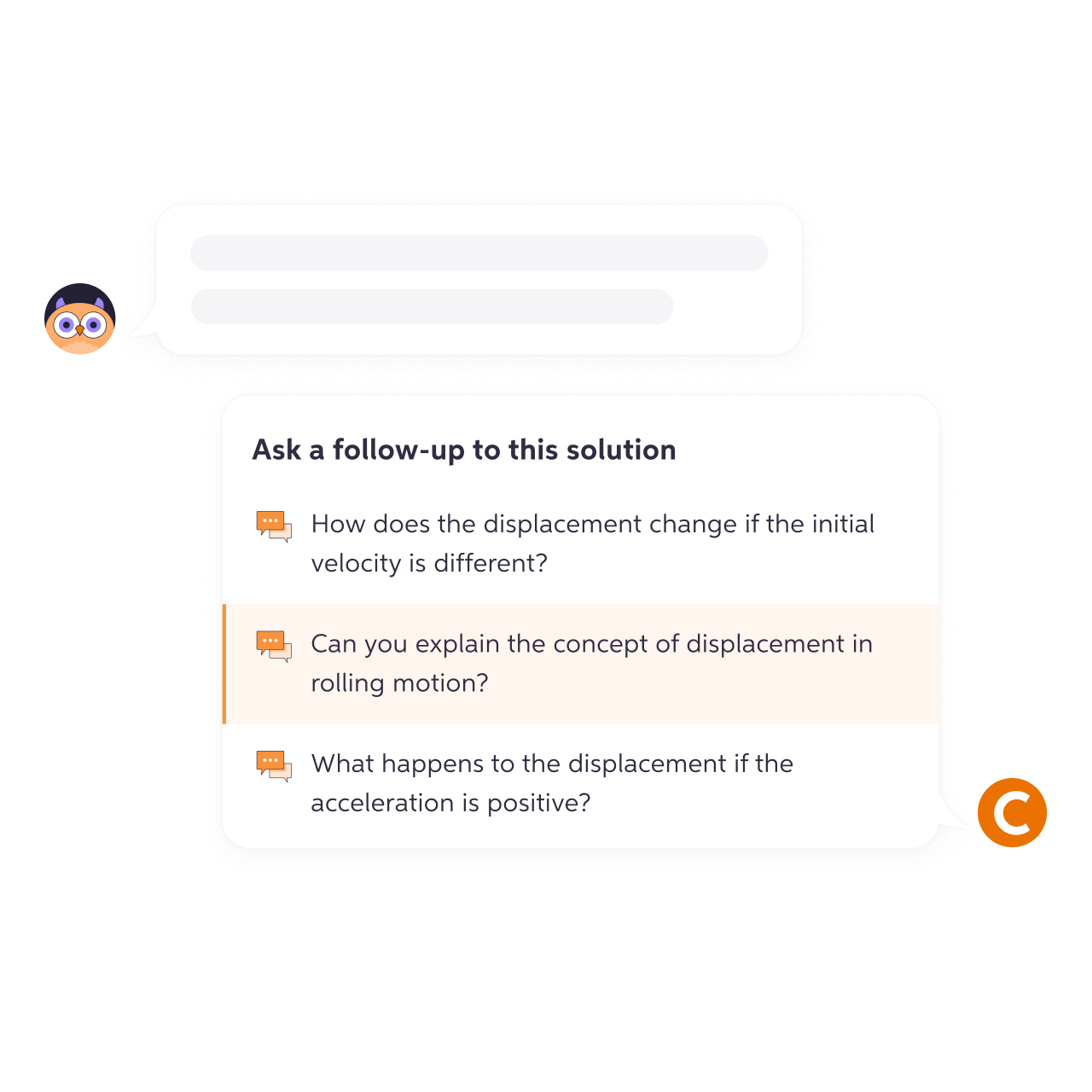

The first question that came into my mind was: how long does it take to get a response from the AI? This seemed like a straightforward question, but I realized it was far more complex than I anticipated when I posed it to my PM. To get an accurate answer, we needed input from five backend teams to run load tests and calculate the average time range.

Since understanding this was critical for brainstorming solutions, I took the initiative to find out the answer with the PM and engineers. I visualized the backend loading timeline, breaking down the intricate process to make the problem easier to address. This diagram was highly appreciated by stakeholders and significantly accelerated the process of understanding loading times.

After a week of collaborative effort, I finally had an answer: the loading time for most responses ranged from 3 to 45 seconds. This presented a significant challenge because, as the Nielsen Norman Group states,

"A 10-second delay will often make users leave a site immediately."

Here’s a summary of the main challenges we discovered:

Long latency

The loading time takes up to 45 seconds, far exceeding the 10-second threshold that typically keeps users on the site.

Uncertainty in loading time

We couldn’t estimate the exact loading time for each response when the system receives a question, leaving no clear way to inform users about how long they should wait.

Response failure

Not all responses could be generated within the 45-second limit, meaning there was no guarantee that users would receive an answer even after the long wait.

Ideation

How to keep users on the page?

Could we present a similar Q&A from the archive while the user waits for a response? This was my initial idea, but I soon realized it wouldn’t work. Previous UX research showed that users would leave Chegg and search other sites for solutions if the relevance wasn’t high. After discarding this approach, I started considering another question: how can we design the loading state to encourage users to wait longer?

To keep users engaged while they wait, there were two main approaches: first, we needed to explain why the delay was happening, and second, we should provide an estimated wait time. While this seemed straightforward in theory, the execution turned out to be much more challenging.

Messaging strategies

How do we communicate the loading process to users

If we were to tell users, "Once we receive your question, it's sent to a moderation service to check for academic violations, then the subject is detected. After that, the question is routed to the Automation Engine..." it would do nothing but adding confusion. I collaborated with the content designer and PMMs on messaging strategies, and we aligned on the following approaches:

Focusing on the value

The messages should communicate clearly that we're working on generating a personalized, high-quality solution that’s worth the wait.

Dynamically updating the messages

The messages should update periodically to show progress and set the right tone throughout the waiting process.

Design Iteration

It's all about the perception of the wait

The loader is a great way to keep users engaged by providing a sense of control and transparency. Ideally, it should show how much time is left for the loading process. However, in our case, an estimated loading time was unavailable, and I almost abandoned the idea of adding a loader.

While drawing inspiration from other products and brainstorming with engineers, I realized that the actual loading time isn’t as important as the perceived loading time. If the design can make users feel that the wait is shorter, then it’s a success.

Three-step loader

The first version of the loader I designed broke the loading process into three steps to make it feel more manageable. I worked with the engineers to see if the frontend could fetch backend status updates, and soon I discovered that it wouldn’t work because one step took the majority of the loading time, while the others took just one or two seconds.

Final solution - "Fake" loader

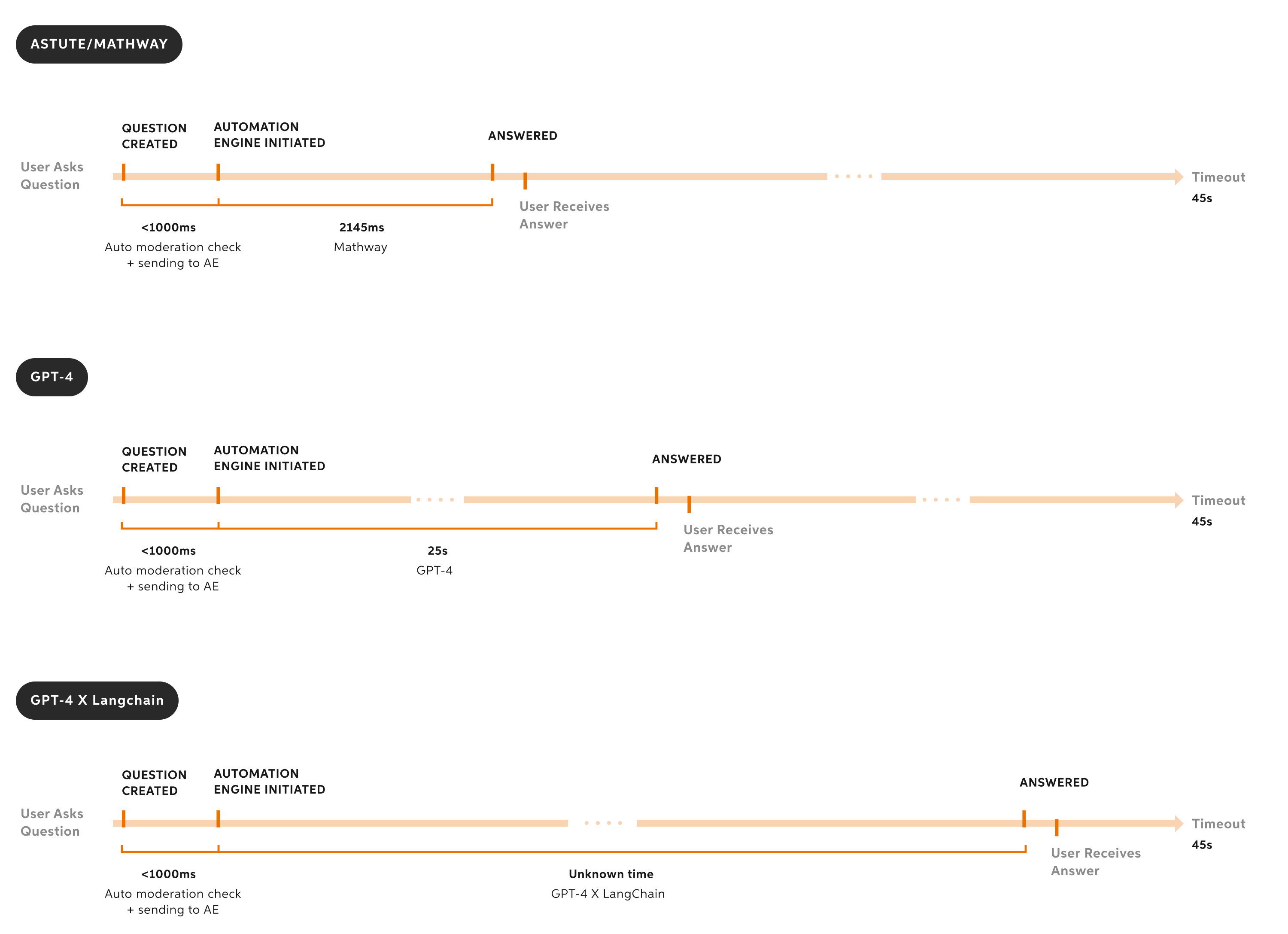

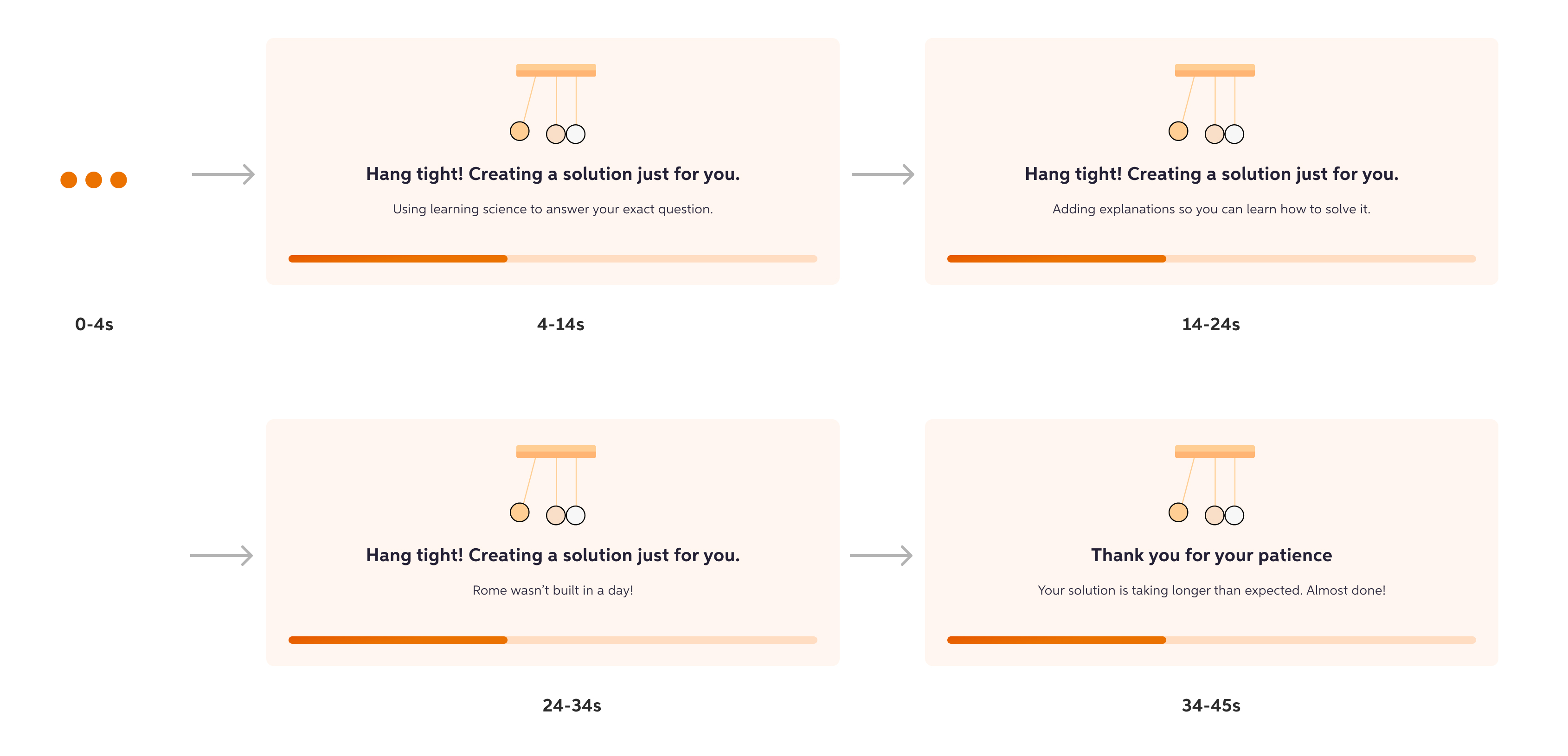

With no estimated loading time and no clear way to break the process into steps, I had to get creative in shaping the user’s perception. The loader relied on two tricks:

Keeping it moving

Keeping the loading animation in motion to signal progress, using a constantly moving progress bar and a motion graphic.

Sunk-cost effect

Using the sunk-cost effect to make it harder for users to abandon. This is achieved by quickly moving the progress bar for the first few seconds, giving the impression that most of the work is already done.

Here’s how the loader worked:

- The progress starts quickly and then slows to a consistent pace.

- Once the answer is ready, the progress jumps to 100%, signaling completion.

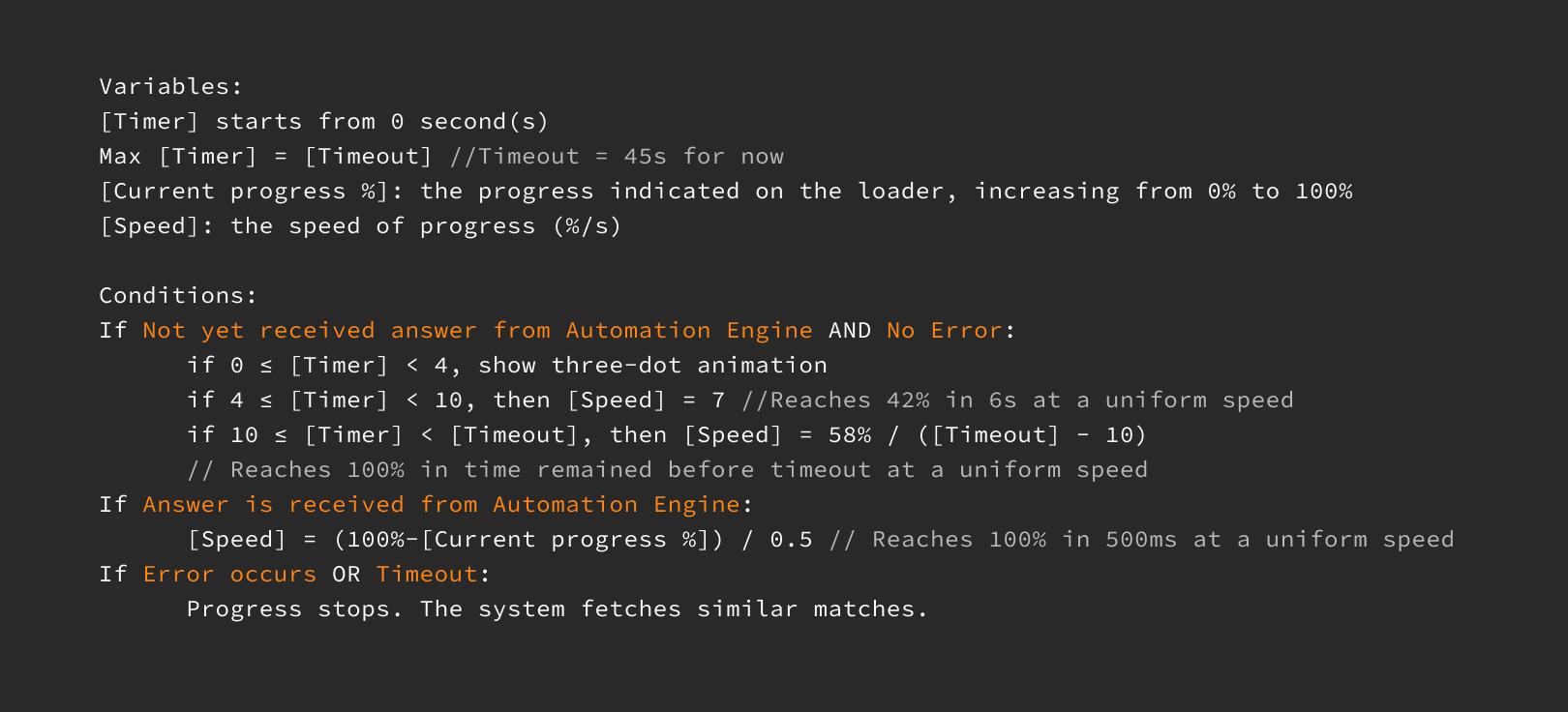

I translated this approach into "if/then" logic and developed formulas to calculate the loader’s progress, making it easy for engineers to implement. These formulas were also highly scalable, ensuring they could adapt to any changes in the timeout limit without requiring significant rework.

Edge cases

Designing for different scenarios

The big loading component could be an overkill for quick responses

While sharing my design with the UX team, I realized that a big loading component could create the perception of slowness for answers that are generated quickly. To address this, I decided to divide the loading process into two phases. For answers generated within 4 seconds, the loading animation would simply show a light-weight three-dot animation. After 4 seconds, the full loading component would appear. The 4-second threshold was based on the average time it takes for Mathway—the fastest model—to generate answers, typically within 10 seconds.

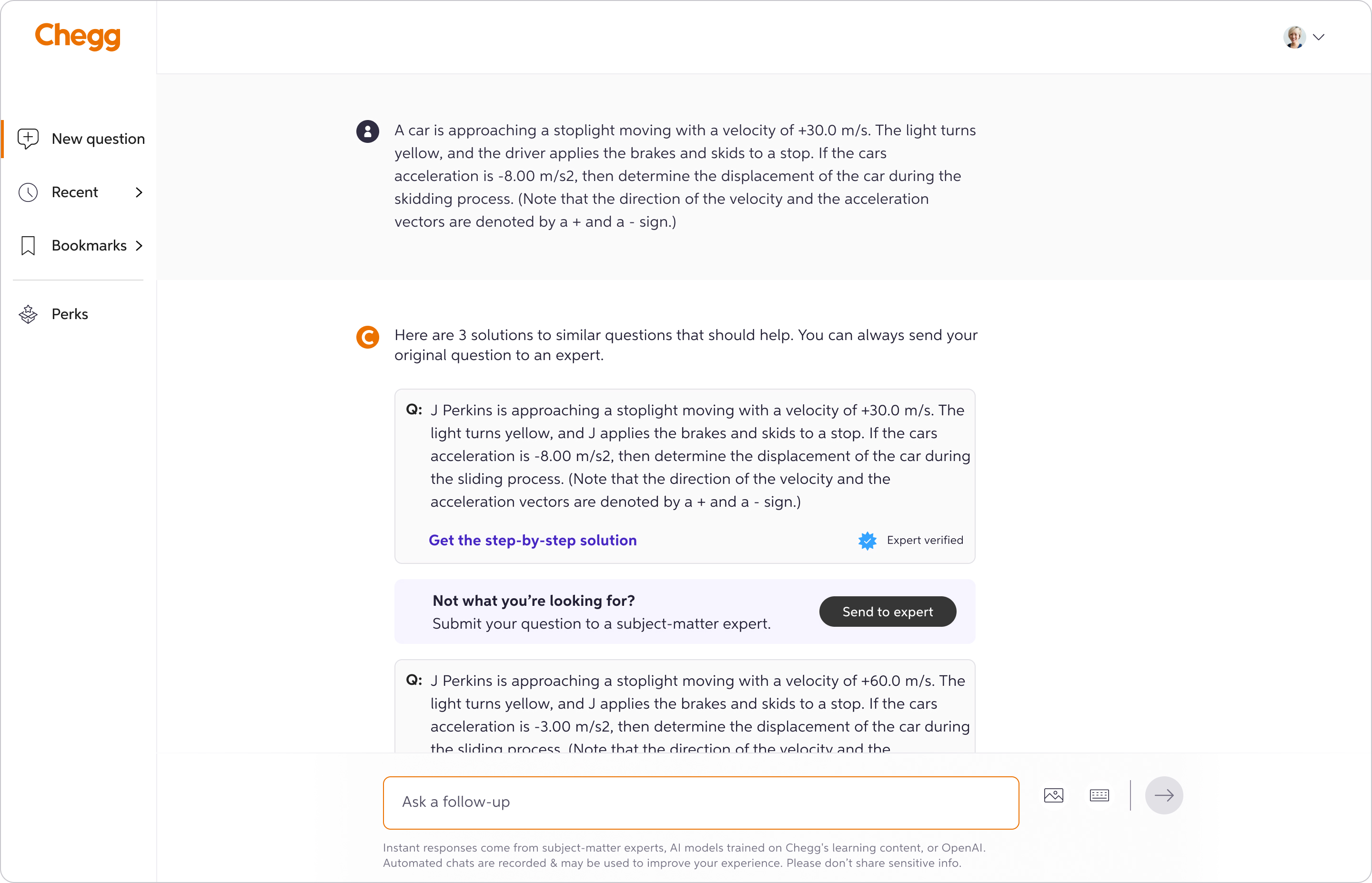

No answer state

Initially, the content designer and I considered setting the expectation that sometimes users might not get an answer even after waiting 45 seconds, with an apology message in case that happened. However, after discussing with the PM, we agreed that we didn’t want this edge case to negatively impact the perceived quality of the product. Instead, we opted for a more positive tone. As the 45-second timeout approached, the message would change to: “Your solution is taking longer than expected. Almost done!” This helped set the right expectations as the likelihood of no answer increased. If the system failed to generate a solution, we provided similar Q&As from the archive, offering something helpful instead of simply stating it was an error.

Outcome

Successfully preventing user drop-off

Without the loading state design, most users would likely abandon the site after 10 seconds of waiting. After launching the new AI learning experience with the loading design, there was no noticeable increase in drop-off rates.

Reflection

Utilizing visualization skills to enhance cross-functional collaboration

I initially created the loading timeline just for myself to understand the complex backend process. However, I soon discovered it was incredibly helpful for the PM and engineers to clarify loading times and communicate with the squads we depended on. As a designer, I once associated visualization only with wireframes, prototypes, and user flows. In reality, my true superpower goes far beyond these artifacts.